Rogue

We study many interesting properties of reinforcement learning in Rogue, including limited observation, maze exploration, survival, and generalization.

Kanagawa, Y. and T. Kaneko “Rogue-Gym: A New Challenge for Generalization in Reinforcement Learning,” in IEEE Conference on Games (CoG), pp. 1–8 (2019), DOI: 10.1109/CIG.2019.8848075

https://github.com/kngwyu/rogue-gym

Catan

Catan is a multi-player imperfect-information game that involves negotiation among players.

Gendre, Q. and T. Kaneko “Playing Catan with Cross-Dimensional Neural Network,” in ICONIP, pp.

Reinforcement Learning in Half Field Offense

Half Field Offense (HFO) is a subtask of RoboCup 2D simulated soccer. Reinforcement learning in HFO is a challenging research topic. We developed a hierarchical advantage and present HA-PPO, which properly handles parameterized actions (e.g., turn (action) by 30 degrees (parameter)) in PPO. The goal success rate on the one-vs-one task (agent vs. the built-in goalkeeper) is about 71%, the best achieved by reinforcement learning agents.
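A parameterized action pairs a discrete action type with a continuous parameter, so a PPO-style policy needs the log-probability of both parts together. The following is a minimal sketch of that representation only, not HA-PPO itself: the hierarchical advantage is not shown, and the action names other than "turn", the fixed policy outputs (`kind_probs`, `param_means`, `param_std`), and all function names are hypothetical stand-ins for what a neural network would produce.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ParameterizedAction:
    kind: str         # discrete action type, e.g. "turn"
    parameter: float  # continuous parameter, e.g. an angle in degrees


def gaussian_log_prob(x, mean, std):
    """Log-density of a 1-D Gaussian, used for the continuous parameter."""
    return (-((x - mean) ** 2) / (2.0 * std * std)
            - math.log(std) - 0.5 * math.log(2.0 * math.pi))


def sample_action(kind_probs, param_means, param_std, rng):
    """Sample the action type first, then its parameter given that type.

    Returns the action and its joint log-probability, which is the sum of
    the discrete and continuous log-probabilities.
    """
    kinds = list(kind_probs)
    kind = rng.choices(kinds, weights=[kind_probs[k] for k in kinds])[0]
    parameter = rng.gauss(param_means[kind], param_std)
    log_prob = (math.log(kind_probs[kind])
                + gaussian_log_prob(parameter, param_means[kind], param_std))
    return ParameterizedAction(kind, parameter), log_prob


# Hypothetical policy outputs for a single state (assumed values).
kind_probs = {"turn": 0.5, "dash": 0.3, "kick": 0.2}
param_means = {"turn": 30.0, "dash": 50.0, "kick": 80.0}
action, log_prob = sample_action(kind_probs, param_means, 5.0, random.Random(0))
```

In a PPO update, a joint log-probability of this kind would enter the probability ratio between the new and old policies; how the advantage is assigned to the discrete and continuous parts is where a method such as HA-PPO would differ from this sketch.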

In reinforcement learning (RL), agents improve their ability through interaction with an environment. The goal of RL in games is to build strong agents without supplying domain knowledge. AlphaZero is one such application of RL to games.
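The interaction loop described above can be illustrated with a small sketch. It assumes a hypothetical toy environment (`Corridor`, a five-cell walk to a goal) and uses tabular Q-learning as a stand-in learning rule; neither is taken from the work listed here, and the hyperparameters are arbitrary.

```python
import random


class Corridor:
    """Hypothetical toy environment: walk from cell 0 to the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        delta = 1 if action == 1 else -1
        self.pos = max(0, min(self.length - 1, self.pos + delta))
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the agent improves only from observed rewards."""
    rng = random.Random(seed)
    env = Corridor()
    q = [[0.0, 0.0] for _ in range(env.length)]
    for _ in range(episodes):
        state = env.reset()
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy choice, with random tie-breaking.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env.step(action)
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy action in every non-terminal cell (1 means "move right").
greedy = [0 if left > right else 1 for left, right in q_table[:-1]]
```

The agent is never told that moving right is correct; the preference emerges from the reward signal alone, which is the sense in which no domain knowledge is supplied.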

Domains: HFO, Rogue, Catan, StarCraft II

Exploration: DEIR

AlphaZero

Nakayashiki, T. and T. Kaneko “Maximum entropy reinforcement learning in two-player perfect information games,” in IEEE SSCI, pp. 1–8 (2021), DOI: 10.1109/SSCI50451.2021.9659991

Option/skill

Kanagawa, Y. and T.

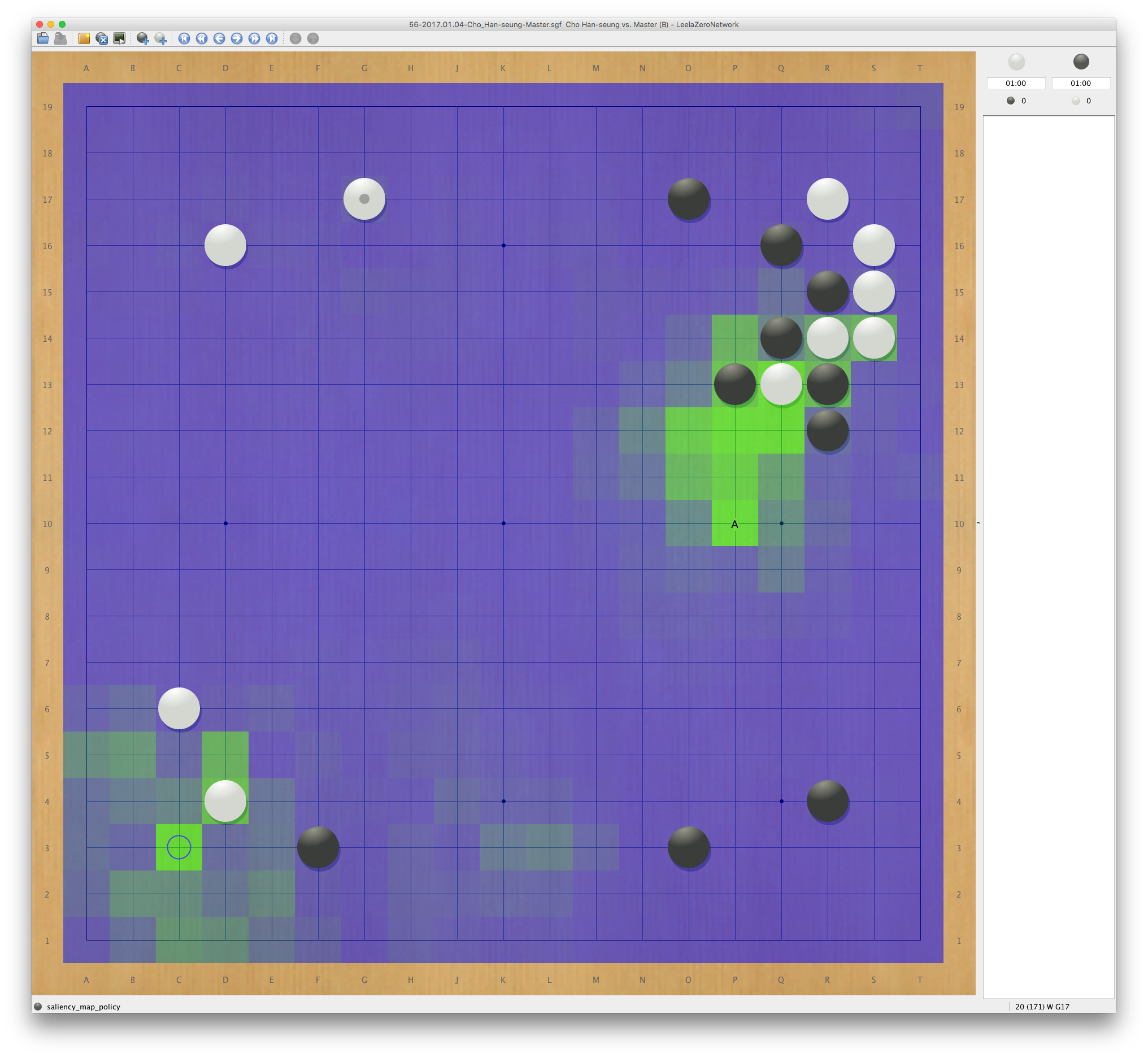

Computer Go.

Papers

Mandai, Y. and T. Kaneko “RankNet for evaluation functions of the game of Go,” ICGA Journal, Vol. 41, No. 2, pp. 78–91 (2019), DOI: 10.3233/ICG-190108.

Takeuchi, S., T. Kaneko, and K. Yamaguchi “Evaluation of Game Tree Search Methods by Game Records,” IEEE Transactions on Computational Intelligence and AI in Games, Vol. 2, No. 4, pp. 288–302 (2010).

Yoshimoto, H., K. Yoshizoe, T. Kaneko, A. Kishimoto, and K. Taura “Monte Carlo Go Has a Way to Go,” in Twenty-First National Conference on Artificial Intelligence (AAAI-06), pp. 1070–1075 (2006).

Others

https://github.

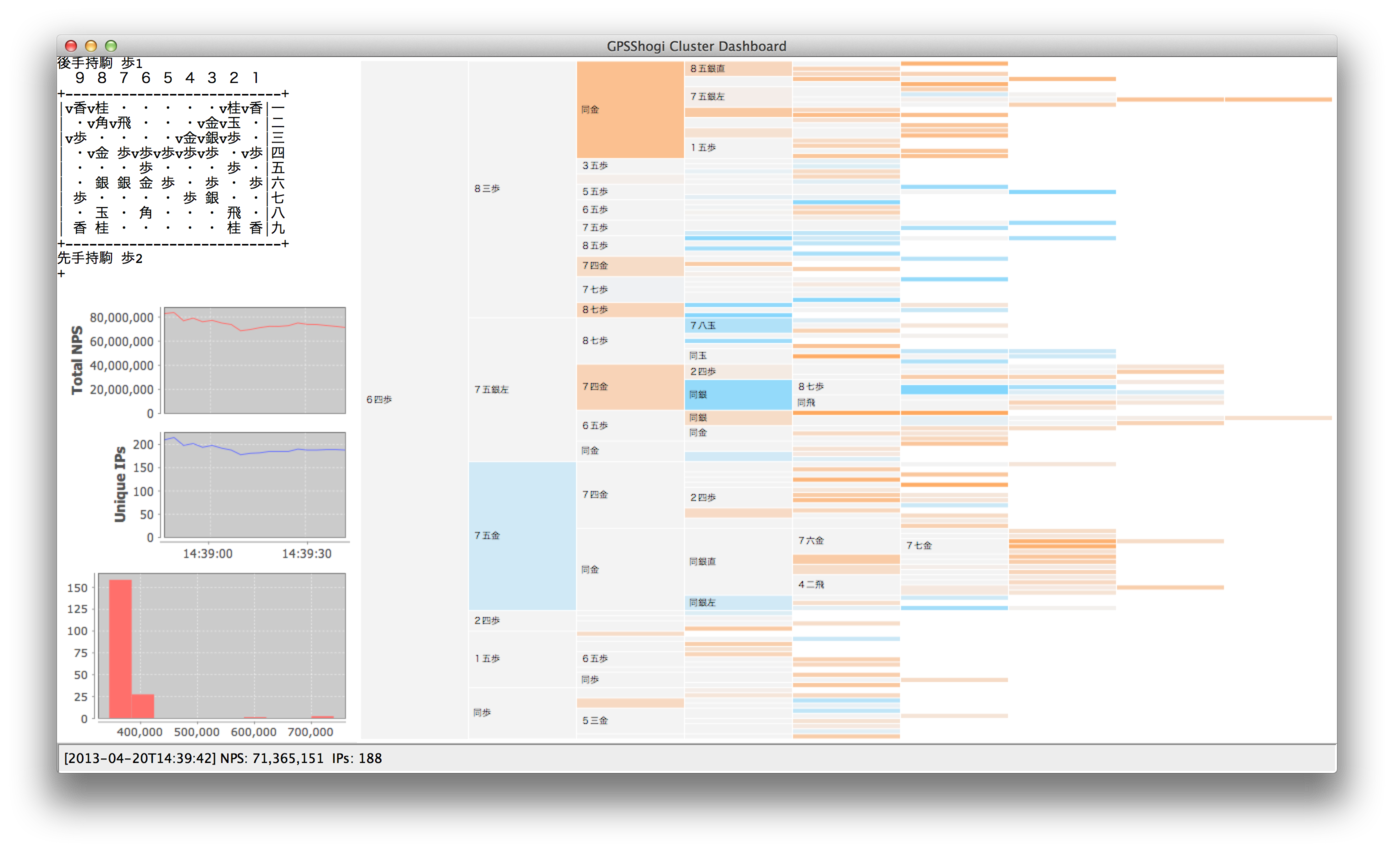

Parallel or distributed search

Yokoyama, S., T. Kaneko, and T. Tetsuro “Parameter-Free Tree Style Pipeline in Asynchronous Parallel Game-Tree Search,” in The 14th International Conference on Advances in Computers and Games.

Yoshizoe, K., A. Kishimoto, T. Kaneko, H. Yoshimoto, and Y. Ishikawa “Scalable Distributed Monte-Carlo Tree Search,” in Proceedings of the 4th Symposium on Combinatorial Search (SoCS'2011), pp. 180–187 (2011).

Monte-Carlo tree search

Mandai, Y. and T. Kaneko “LinUCB Applied to Monte Carlo Tree Search,” Theoretical Computer Science.

Learning algorithms

Kaneko, T. and T. Takizawa “Computer Shogi Tournaments and Techniques,” IEEE Transactions on Games, Vol. 11, No. 3, pp. 267–274 (2019), DOI: 10.1109/TG.2019.2939259.

Wan, S. and T. Kaneko “Heterogeneous Multi-Task Learning of Evaluation Functions for Chess and Shogi,” in ICONIP (2018).

Wan, S.